ETL Pipeline

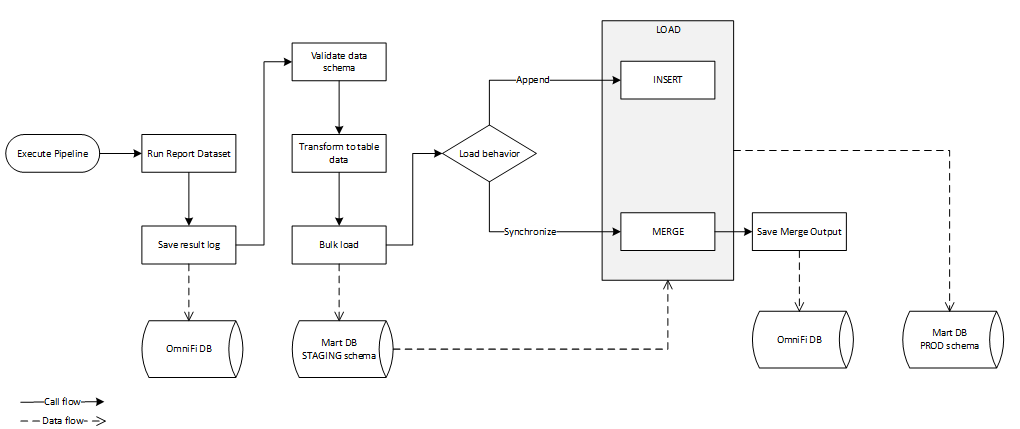

Because the OmniFi Data Mart database schema is fully denormalized and has no relational constraints, the ETL pipeline jobs for each table can operate independently and concurrently.
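As an illustration of why independent tables allow concurrent jobs, the following minimal sketch runs one job per table in a thread pool. It is not OmniFi's scheduler: the function names `run_table_job` and `run_all`, the table names, and the use of a thread pool are all assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def run_table_job(table: str) -> str:
    """Hypothetical stand-in for the full ETL job of a single table."""
    # No job reads or waits on another table, so no ordering is needed.
    return f"{table}: done"


def run_all(tables: list[str], max_workers: int = 4) -> dict[str, str]:
    """Run one job per table concurrently and collect the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the same order as the input tables.
        results = pool.map(run_table_job, tables)
        return dict(zip(tables, results))


if __name__ == "__main__":
    # Table names are illustrative only.
    print(run_all(["deal", "cash_flow", "position"]))
```

Because no job depends on another table, the order in which the jobs finish does not matter.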

The ETL pipeline executes in two main stages, each divided into several sub-stages. The two main stages execute in separate worker processes: the first stage handles the extract and transform operations, and the second stage loads the data. Both stages save audit trails of the data to log files that can be reviewed directly in OmniFi Web.
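The two-stage split can be sketched roughly as follows. This is an illustration under stated assumptions, not the OmniFi implementation: both stages run in one process here for brevity, and the file-based hand-off, the file names, and the functions `extract_transform` and `load` are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def extract_transform(rows: list[dict], workdir: Path) -> Path:
    """Stage 1: transform the source rows and hand them off as a file."""
    transformed = [{**row, "amount": float(row["amount"])} for row in rows]
    handoff = workdir / "handoff.json"  # hypothetical hand-off file
    handoff.write_text(json.dumps(transformed))
    # Each stage appends to its own audit log.
    with (workdir / "stage1.log").open("a") as log:
        log.write(f"extract_transform: {len(transformed)} rows\n")
    return handoff


def load(handoff: Path, workdir: Path) -> list[dict]:
    """Stage 2: read the hand-off file; the database load is omitted."""
    rows = json.loads(handoff.read_text())
    with (workdir / "stage2.log").open("a") as log:
        log.write(f"load: {len(rows)} rows\n")
    return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        handoff = extract_transform([{"id": 1, "amount": "10.5"}], workdir)
        print(load(handoff, workdir))
```

Keeping the hand-off explicit is what would let the two stages run in different worker processes.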

The load stage bulk-loads the data into the staging table and then merges or copies it into the mart table, depending on the principle configured for the model.
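The load sequence can be sketched with an in-memory SQLite database. Several things here are assumptions for illustration only: the `deal` table and its columns, the principle names `"copy"` and `"merge"`, and the interpretation that copy replaces the mart table contents while merge upserts rows by primary key. The SQL that OmniFi actually issues may differ.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a mart table in `main` and a same-named staging table."""
    conn = sqlite3.connect(":memory:")
    # An attached database stands in for the <schema>_stage schema.
    conn.execute("ATTACH DATABASE ':memory:' AS main_stage")
    for schema in ("main", "main_stage"):
        conn.execute(
            f"CREATE TABLE {schema}.deal (id INTEGER PRIMARY KEY, amount REAL)"
        )
    return conn


def load(conn: sqlite3.Connection, rows: list[tuple], principle: str) -> None:
    """Clear and bulk-load staging, then merge or copy into the mart table."""
    conn.execute("DELETE FROM main_stage.deal")
    conn.executemany(
        "INSERT INTO main_stage.deal (id, amount) VALUES (?, ?)", rows
    )
    if principle == "copy":
        # Assumed meaning: the mart table becomes a copy of staging.
        conn.execute("DELETE FROM main.deal")
        conn.execute(
            "INSERT INTO main.deal (id, amount) "
            "SELECT id, amount FROM main_stage.deal"
        )
    elif principle == "merge":
        # Assumed meaning: insert new keys, overwrite existing keys.
        conn.execute(
            "INSERT OR REPLACE INTO main.deal (id, amount) "
            "SELECT id, amount FROM main_stage.deal"
        )
    else:
        raise ValueError(f"unknown principle: {principle}")
    conn.commit()


def mart_rows(conn: sqlite3.Connection) -> list[tuple]:
    """Return the mart table contents ordered by key."""
    return conn.execute("SELECT id, amount FROM main.deal ORDER BY id").fetchall()
```

In this sketch, a merge keeps mart rows whose keys are absent from the new batch, whereas a copy discards them.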

Note: The staging table has the same name as the mart table and is located in the `<schema>_stage` database schema. The staging table is cleared before new data is bulk-loaded into it, and it remains populated until the next load operation.
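The naming rule in the note can be expressed as a small helper. The function name `staging_table_name` and the example schema and table names are hypothetical; only the `<schema>_stage` convention comes from the note above.

```python
def staging_table_name(schema: str, table: str) -> str:
    """Return the qualified staging table name for a mart table."""
    # Same table name, located in the <schema>_stage schema.
    return f"{schema}_stage.{table}"


if __name__ == "__main__":
    # "mart" and "deal" are illustrative names only.
    print(staging_table_name("mart", "deal"))  # prints mart_stage.deal
```

Because the staging table stays populated until the next load, it can be queried under this name to inspect the most recently loaded batch.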
