Web API

The OmniFi Access Web API provides REST access to the OmniFi Web portal. It covers, among other things, access to report workbooks, downloading report output, triggering report execution, and downloading historical results of custom endpoints.

Collections

The Web API consists of a number of read-only collections providing access to various types of collateral. Many of the collections can be navigated into for further detail.

| Collection | Comment |
|---|---|
| custom-endpoints | The custom-endpoints collection contains the configuration for all endpoints in the custom data API. By navigating into this collection, detailed information on required parameters etc. can be obtained. |
| custom-endpoint-logs | The custom-endpoint-logs collection provides log records of executions of the custom data API. It contains information on date/time of execution, success/error information, and by navigating into specific records the result output of historical executions can be retrieved. Note that historical result data can only be downloaded as long as the data has not been cleaned by the housekeeping process. |
| custom-endpoint-tasks | Provides configuration information on scheduled tasks configured to execute custom data endpoints. By accessing specific tasks in this collection, it is possible to trigger executions of custom endpoint tasks. |
| custom-endpoint-task-logs | Provides log records of executions of custom endpoint tasks, including date/time of execution, result etc. |
| export-repositories | Export repositories are storage areas to which report executions can export result files. This collection provides details on repository configuration as well as status to determine whether the repository is on-line and healthy. |
| reports | This collection contains details on all reports checked into the web portal, including several sub-entities such as required parameters, email/file layouts, permissions etc. Reports can also be triggered to execute by calling the execute RPC function. |
| report-datasets | Report datasets are datasets provided by reports on which custom data endpoints are based. Through this collection details on all datasets in the web portal can be accessed, including parameters and metadata of each dataset. |
| report-logs | Contains log records of report executions, including date/time and status of the execution. By navigating into specific log records result files can be downloaded, provided that they haven't been cleaned by the automatic housekeeping function. |
| report-tasks | Provides configuration information on scheduled report execution tasks, including iterations, parameters and email configuration. It is also possible to trigger executions of report tasks using the execute RPC function. |
| report-task-logs | Log records of report task executions, including information on provided parameters, sent emails etc. |
| schedules | A collection of schedules configured in the web portal. It is possible to trigger executions of a schedule by calling the execute or execute-adhoc RPC functions. |

Permissions

Accessing API data is subject to the web portal access permissions. Collections are filtered based on access permissions, i.e. listing reports using the collection endpoint <Service URL>/api/web/reports returns only the reports accessible to the current login account.

Accessing specifically identified resources, e.g. accessing a specific report using <Service URL>/api/web/reports/42 will result in a 403 HTTP status code if the current login account doesn’t have access to that specific report.
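The difference between a filtered collection and a forbidden resource matters when scripting against the API. The following is a minimal sketch of a helper that treats a 403 as "no access" rather than as a crash. The function name fetch_resource is hypothetical, and the sketch assumes that a successful read returns status 200 with a JSON body when the Accept header asks for JSON.

```python
import json


def fetch_resource(conn, path, headers):
    """GET a single resource; return the parsed JSON, or None on HTTP 403.

    conn is expected to behave like http.client.HTTPConnection.
    """
    conn.request("GET", path, headers=headers)
    res = conn.getresponse()
    # Always read the body so the connection can be reused for the next call.
    body = res.read()
    if res.status == 403:
        # The current login account lacks access to this specific resource.
        return None
    if res.status != 200:
        # Assumption: anything other than 200 is treated as an error here.
        raise RuntimeError("Unexpected HTTP status %d for %s" % (res.status, path))
    return json.loads(body.decode("utf-8"))
```

With a real connection, a call such as fetch_resource(conn, "/api/web/reports/42", headers) would return None for a report the account cannot see.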

Filters

Many collection endpoints, e.g. report-logs, accept a Filter parameter that requests only those records from the collection that match specific criteria. The filter is supplied in the format Filter=<field> <operator> <value>, and multiple criteria can be combined using the logical operators and and or. To list the report logs that are currently executing, the following can be used:

<Service URL>/api/web/report-logs?Filter=State eq Executing

To check for logs that are either Failed or Finished, you can combine multiple criteria using the or logical operator:

<Service URL>/api/web/report-logs?Filter=State eq Failed or State eq Finished
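When filters are built in a script, composing them from small pieces helps avoid typos. The helpers below (criterion, any_of and all_of are hypothetical names, not part of the API) are a sketch that only produces the textual format described above. The documentation does not state how mixed and/or expressions are grouped, so the sketch does not combine the two.

```python
def criterion(field, operator, value):
    """Format one '<field> <operator> <value>' criterion."""
    return "%s %s %s" % (field, operator, value)


def any_of(*criteria):
    """Combine criteria so that a record matching any of them is returned."""
    return " or ".join(criteria)


def all_of(*criteria):
    """Combine criteria so that only records matching all of them are returned."""
    return " and ".join(criteria)


state_filter = any_of(
    criterion("State", "eq", "Failed"),
    criterion("State", "eq", "Finished"),
)
print(state_filter)  # State eq Failed or State eq Finished
```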

Supported operators:

| Operator | Comment |
|---|---|
| eq | Equals. Supports all data types. |
| ne | Not equals. Supports all data types. |
| gt | Greater than. Supports scalar data types, including numbers and dates. |
| lt | Less than. Supports scalar data types, including numbers and dates. |
| ge | Greater than or equals. Supports scalar data types, including numbers and dates. |
| le | Less than or equals. Supports scalar data types, including numbers and dates. |
| like | Like operator. Matches strings similar to the value string. The % char is a wild card character that can be used in the value, e.g. Portfolio like %FX%. |

When using the like operator, the % wild-card character must be URI encoded as %25, e.g. Portfolio like %25FX%25.
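In a script the encoding does not have to be done by hand. As a sketch, the standard library function urllib.parse.quote percent-encodes both the spaces and the % wild-card. The helper name encode_filter and the host http://localhost are illustrative assumptions.

```python
from urllib.parse import quote


def encode_filter(filter_expr):
    """Percent-encode a filter expression for use as the Filter query value."""
    # safe="" makes quote() encode every reserved character, including '%' and '/'.
    return quote(filter_expr, safe="")


encoded = encode_filter("Portfolio like %FX%")
print(encoded)  # Portfolio%20like%20%25FX%25

url = "http://localhost/api/web/report-logs?Filter=" + encode_filter("State eq Executing")
print(url)  # http://localhost/api/web/report-logs?Filter=State%20eq%20Executing
```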

Paginated collections

Collections that grow over time and are expected to contain large amounts of data return paginated results, limiting the maximum number of records returned per call. One such collection is report-logs: calling <Service URL>/api/web/report-logs returns a paginated result structure:

| Property | Comment |
|---|---|
| PageId | The Id of the page. |
| RecordCount | The number of records in the page. |
| TotalSize | The total size of the collection. |
| Records | A list of records making up the page. |

The page size can be set by supplying the PageSize argument:

<Service URL>/api/web/report-logs?PageSize=500

To retrieve the next page the ReferencePageId argument is set to the PageId of the current page. To retrieve the previous page, supply a negative PageSize:

<Service URL>/api/web/report-logs?PageSize=-100&ReferencePageID=CwAAABQAAAA=

The default value of PageSize is 100.
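Walking through a whole paginated collection can be sketched as a generator that feeds each page's PageId back as ReferencePageId. The names iter_records and make_fetcher are hypothetical. The documentation does not say how the end of the collection is signalled, so the sketch assumes that a page with fewer records than requested (or none) is the last one.

```python
import json
from urllib.parse import urlencode


def make_fetcher(conn, path, headers):
    """Return a function that GETs one page of `path` for a given query dict."""
    def fetch_page(query):
        # urlencode also escapes characters such as '=' in a base-64 PageId.
        conn.request("GET", path + "?" + urlencode(query), headers=headers)
        return json.loads(conn.getresponse().read().decode("utf-8"))
    return fetch_page


def iter_records(fetch_page, page_size=100):
    """Yield records page by page, following PageId -> ReferencePageId."""
    reference_page_id = None
    while True:
        query = {"PageSize": page_size}
        if reference_page_id is not None:
            query["ReferencePageId"] = reference_page_id
        page = fetch_page(query)
        records = page["Records"]
        for record in records:
            yield record
        # Assumption: a short or empty page means there is nothing more to read.
        if len(records) < page_size:
            break
        reference_page_id = page["PageId"]
```

A typical call would be iter_records(make_fetcher(conn, "/api/web/report-logs", headers), page_size=500), where conn is an http.client.HTTPConnection.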

Implementing typical use cases

In this section we provide code samples for implementing typical use cases. The code samples are based on Python 3.6 x64, but any modern programming language can be used to achieve the same results.
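The samples in this section send a hard-coded Authorization header for HTTP basic authentication. As a small sketch, such a header value can be derived from a user name and password with the standard base64 module. The helper name basic_auth_header is hypothetical; the credentials a and a are the ones that produce the value used in the samples.

```python
import base64


def basic_auth_header(user, password):
    """Build the value of an HTTP basic authentication Authorization header."""
    # The phrase user:password is base-64 encoded, as the samples describe.
    token = base64.b64encode((user + ":" + password).encode("utf-8"))
    return "Basic " + token.decode("ascii")


print(basic_auth_header("a", "a"))  # Basic YTph
```

Base-64 is an encoding and not encryption, so a plain HTTP connection exposes the credentials to anyone who can read the traffic.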

Downloading last report log file

We warm up with a simple example that can be prototyped with only a web browser. Assume we want to download the result file of the latest execution of a specific report. The report is scheduled to run in OmniFi Web at regular intervals, and once per day we want to archive the latest result file.

The name of a report is not unique, so to download the last log file you must first know the Id of the report. Assuming you know a part of the name of the report, you can look up the Id from the reports collection. (Any web browser works, but Chrome, for example, requires specific configuration to prompt for basic authentication.)

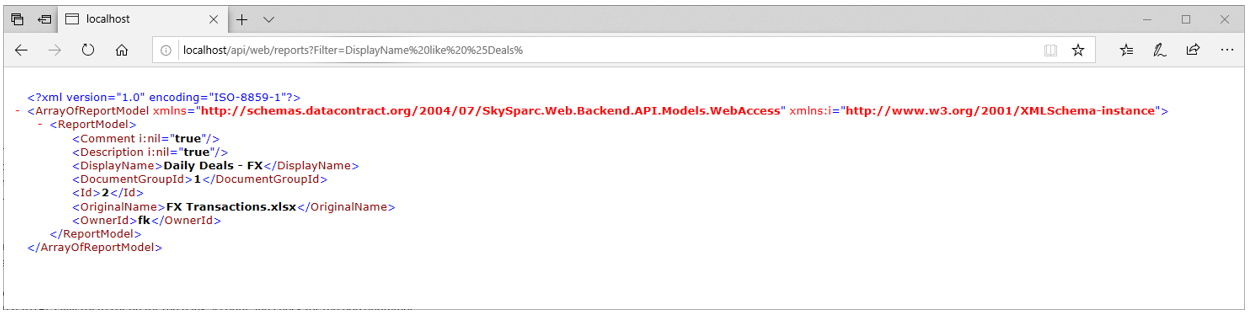

To search for reports named “something like ‘Deals’”, use the URL <Service URL>/api/web/reports?Filter=DisplayName like %25Deals%25. Here % is the wild-card character and %25 is the URI encoding of the % character. Because %De is itself a valid percent-encoded sequence, we must encode the % character manually as %25.

Output from looking up reports named like %Deals%.

The web browser will prompt you to log in. The output when called directly from a web browser is XML by default, because we haven’t provided any Accept header. We can read that the unique Id of the report is 2.
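The same lookup can be scripted. The sketch below assumes that, with an Accept header of application/json, the reports collection returns a JSON list of objects carrying Id and DisplayName fields. The field names are taken from the filter and the Id mentioned above, but the exact response shape is an assumption, and find_report_ids is a hypothetical helper name.

```python
import json
from urllib.parse import quote


def find_report_ids(conn, name_part, headers):
    """Return (Id, DisplayName) pairs for reports whose name contains name_part."""
    filter_expr = "DisplayName like %" + name_part + "%"
    # quote() turns the spaces into %20 and the '%' wild-cards into %25.
    path = "/api/web/reports?Filter=" + quote(filter_expr, safe="")
    conn.request("GET", path, headers=headers)
    res = conn.getresponse()
    reports = json.loads(res.read().decode("utf-8"))
    return [(report["Id"], report["DisplayName"]) for report in reports]
```

Because report names are not unique, the helper returns every match and leaves the choice of Id to the caller.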

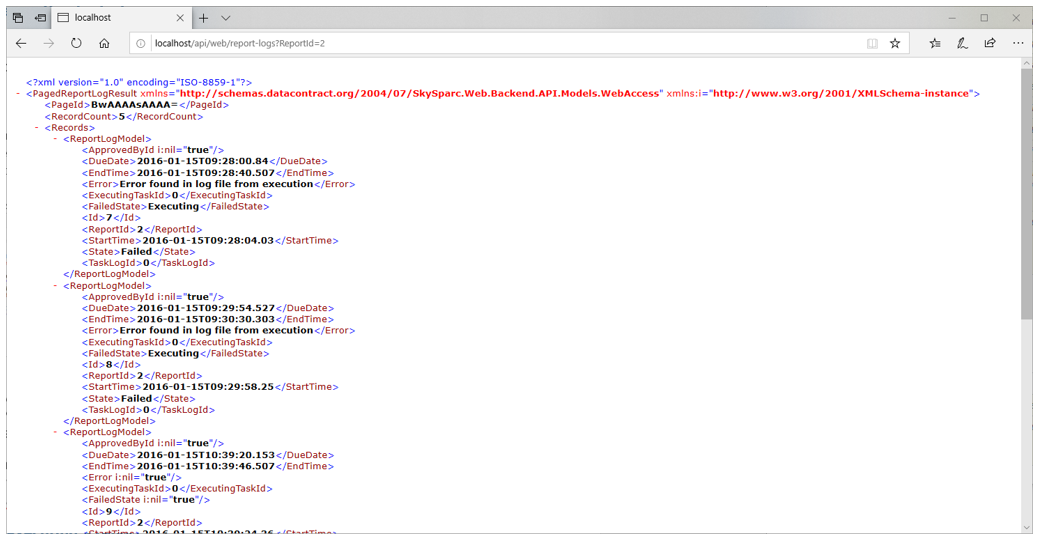

Report logs are found in the report-logs collection, which accepts a number of optional parameters, ReportId being one of them. Visiting the URL <Service URL>/api/web/report-logs?ReportId=2 provides a sample output.

Output from requesting report-logs

The report-logs collection is potentially large and therefore paged. Only a limited number of records are returned, and the records selected are in chronological order. To navigate between pages you can provide the PageId from one page as the ReferencePageId parameter when retrieving the next page.

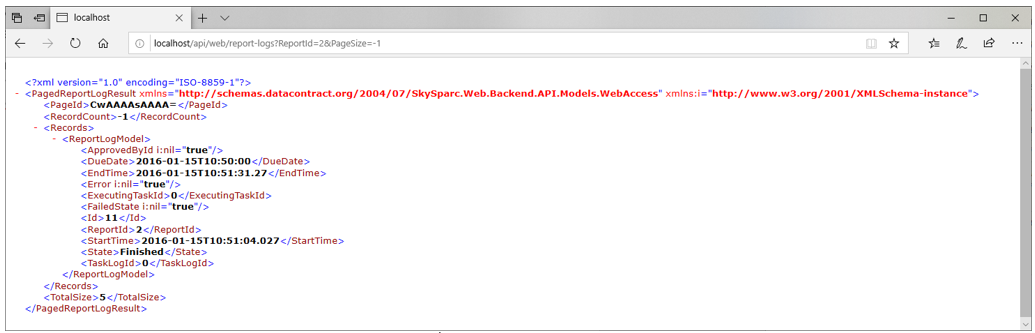

We can also control the page size via the PageSize parameter. The setting PageSize=1 returns a single record, however it will be the oldest record because the records are returned in chronological order. We can set a negative page size, though, which will invert the order, so PageSize=-1 will return the latest log:

<Service URL>/api/web/report-logs?ReportId=2&PageSize=-1

Retrieving the last log file of a report

Since we want the output file of the last log we should make sure the log we are looking at represents a successful execution:

<Service URL>/api/web/report-logs?ReportId=2&PageSize=-1&Filter=State eq Finished

Now that we know the Id of the last successful execution of the report, we can use it to request the result file by calling <Service URL>/api/web/report-logs/{Id}/files/EXCEL/download. If you prototype this call in a web browser the file will be downloaded.
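When the same download is made from a script, the file name has to be read from the Content-Disposition response header, which the script later in this section expects the service to set. That script uses the cgi module, which was removed in Python 3.13. As a sketch of an alternative, the standard email package can parse the header; filename_from_content_disposition and its default value are hypothetical.

```python
from email.message import Message


def filename_from_content_disposition(header_value, default="report-output"):
    """Extract the filename parameter from a Content-Disposition header value."""
    if not header_value:
        # No header at all: fall back to the caller's default name.
        return default
    msg = Message()
    msg["Content-Disposition"] = header_value
    # get_filename() returns `default` when no filename parameter is present.
    return msg.get_filename(default)


print(filename_from_content_disposition('attachment; filename="deals.xlsx"'))  # deals.xlsx
```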

We now know everything we need to create a Python script that automates the process. We can hard-code the report Id since it won’t change, but we still need to find the last successful log and download the result file.

    import cgi
    import http.client
    import json

    # Create a connection to <Service URL>
    conn = http.client.HTTPConnection("localhost", 80)

    # Set up request headers
    headers = {
        # We use basic authentication.
        # The value is the phrase user:password base-64 encoded.
        'Authorization': "Basic YTph",
        # Request Json data since we can parse that natively.
        'Accept': "application/json",
        'cache-control': "no-cache"
    }

    # First request is to find the Id of the last log of report #2.
    # Fire off the request to api/web/report-logs and read the response body.
    conn.request("GET",
                 "/api/web/report-logs?"
                 + "ReportId=2"
                 + "&PageSize=-1"
                 + "&Filter=State%20eq%20Finished",
                 headers=headers)
    res = conn.getresponse()
    jsonData = res.read().decode("utf-8")

    # Decode the json data as objects
    page = json.loads(jsonData)
    logId = page["Records"][0]["Id"]

    # With the Id of the last successful log at hand we can
    # download the Excel workbook file.
    conn.request("GET", "/api/web/report-logs/"
                 + str(logId)
                 + "/files/EXCEL/download", headers=headers)
    res = conn.getresponse()

    # The file name is encoded into the Content-Disposition response header.
    # We use cgi to parse it out.
    contentDisp = res.getheader("Content-Disposition")
    value, params = cgi.parse_header(contentDisp)
    fileName = params["filename"]

    # Create or overwrite a new file in binary mode
    with open("C:/tmp/" + fileName, "wb") as file:
        # Dump the content of the response body into the file
        file.write(res.read())

Executing a report

In this scenario we are executing a report and sending it as an email attachment to some recipient. The report simply reads a single transaction and produces a nice layout of trade details that is used for archiving. We know the report Id is 15, and the transaction number is provided as input to the script.

To execute a report we must know what parameters it accepts, and what parameters are required. This information is available from <Service URL>/api/web/reports/{Id}/parameters. The output is a collection of parameter models, pre-populated with the default values configured for the report.

    import http.client
    import json
    import time

    # Create a connection to <Service URL>
    conn = http.client.HTTPConnection("localhost", 80)

    # Set up request headers
    headers = {
        # We use basic authentication.
        # The value is the phrase user:password base-64 encoded.
        'Authorization': "Basic YTph",
        # Request Json data since we can parse that natively.
        'Accept': "application/json",
        # Indicate that we will be sending Json data
        'Content-Type': "application/json",
        'cache-control': "no-cache"
    }

    # Fire off the request to retrieve the report parameters.
    conn.request("GET",
                 "/api/web/reports"
                 + "/15"
                 + "/parameters",
                 headers=headers)
    res = conn.getresponse()
    jsonData = res.read().decode("utf-8")
    parameters = json.loads(jsonData)
    print(parameters)

The output structure is:

    [
        {
            'Id': '##{#7f822b19-0194-49d3-b603-075ba14c8bea}',
            'Description': 'Transaction Number',
            'Type': 'Int',
            'Value': None
        }
    ]

We find that we need to provide a value for a parameter named Transaction Number. We can simply locate the Transaction Number parameter and set a value for it.

    # Find the Transaction Number parameter and set a value for it
    names = [param["Description"] for param in parameters]
    number = names.index("Transaction Number")
    parameters[number]["Value"] = 333

The execute function is located at <Service URL>/api/web/reports/{Id}/execute. It requires an argument structure as input, in which email options can be specified. To list the email layouts that are available we can read the endpoint <Service URL>/api/web/reports/{Id}/layouts in a web browser. In our case we find that our default layout Id is 25.

    # Set up the argument structure for executing the report.
    args = {
        "MailLayoutId": 25,
        "MailTo": "[email protected]",
        "Parameters": parameters
    }

    # Dump the argument structure to Json format
    jsonArgs = json.dumps(args)

Now we can trigger the report to execute:

    # Send the execution request
    conn.request("POST",
                 "/api/web/reports"
                 + "/15"
                 + "/execute",
                 body=jsonArgs,
                 headers=headers)

    # Parse and print the response
    res = conn.getresponse()
    jsonResponse = res.read().decode("utf-8")
    logRecord = json.loads(jsonResponse)
    print(logRecord)

The request returns a report log record:

    {
        'Id': 312,
        'ReportId': 15,
        'StartTime': '2019-05-06T08:47:39.5881628Z',
        'DueDate': '2019-05-06T08:47:39.5881628Z',
        'EndTime': None,
        'Error': None,
        'ApprovedById': None,
        'FailedState': None,
        'State': 'Executing',
        'ExecutingTaskId': 3018,
        'TaskLogId': 0
    }

Note that the log is still in state Executing. Because some report executions may be long-running and cause the HTTP call to time out, the execute call is asynchronous and returns as soon as the report has started executing, not when it has finished. If we want to track the execution we can do so in the report-logs collection using the Id returned from the execute call.

    # The execute call returned the log record of the execution we just started.
    executingLogId = logRecord["Id"]
    executionState = logRecord["State"]

    while executionState != "Finished" and executionState != "Failed":
        # Sleep 10s with each iteration so we don't shoot off too many requests
        time.sleep(10)
        conn.request("GET",
                     "/api/web/report-logs"
                     + "/" + str(executingLogId),
                     headers=headers)

        # Parse and print the response
        res = conn.getresponse()
        jsonResponse = res.read().decode("utf-8")
        logRecord = json.loads(jsonResponse)
        executionState = logRecord["State"]
        print("State: " + executionState)

    if executionState == "Finished":
        print("Execution finished successfully!")
    else:
        print("Execution failed!")

The script repeatedly requests the log record from report-logs to track the status of the execution, and reacts to the final states Finished and Failed accordingly.

    State: Executing
    State: Executing
    State: GeneratingEmails
    State: WaitingForMailing
    State: SendingEmails
    State: Finished
    Execution finished successfully!
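The polling loop above runs until it sees a final state. If an execution could hang, a variant with an upper bound on the waiting time may be preferable. The following is a sketch only: wait_for_final_state, the 600 second default and the injected fetch_state callable are all assumptions, while the final state names Finished and Failed come from the example above.

```python
import time

FINAL_STATES = ("Finished", "Failed")


def wait_for_final_state(fetch_state, timeout_s=600, interval_s=10,
                         sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_state() until it returns a final state or the timeout expires.

    fetch_state is any callable returning the current State string, e.g. one
    that reads /api/web/report-logs/{Id} and returns logRecord["State"].
    """
    deadline = clock() + timeout_s
    while True:
        state = fetch_state()
        if state in FINAL_STATES:
            return state
        if clock() >= deadline:
            raise TimeoutError(
                "Execution still in state %r after %d s" % (state, timeout_s))
        # Pause between requests so the service is not flooded.
        sleep(interval_s)
```

Passing sleep and clock as arguments keeps the helper testable without real waiting; in normal use the defaults apply.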