Import and Database Module Interfaces

Import Interfaces

Import interfaces import data into Wallstreet Suite, using ComKIT to insert static and operational data. The advantage of using ComKIT for importing is that the Wallstreet Suite business logic applies in the same way as when data is entered in the corresponding editor or board. This significantly reduces the risks associated with automated imports.

Import interfaces support the import and modification of transactions, static data (such as clients and portfolios), and a variety of other entities, such as call-money/call-account and settlements.

Data Definition

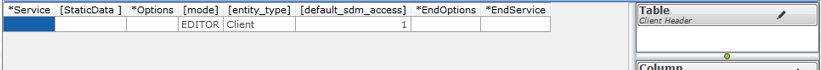

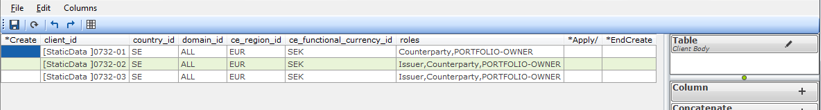

An import interface uses the Data Processing function of OmniFi to import table data (see Data Processing). It must define a minimum of two reports: one for the service header, which indicates what service should be used and how it should be configured, and at least one for an import block.

The service header can be followed by any number of import blocks, creating, removing or otherwise modifying the entity that the service header specifies.

An interface can consist of any number of service headers, nested with any number of import blocks.

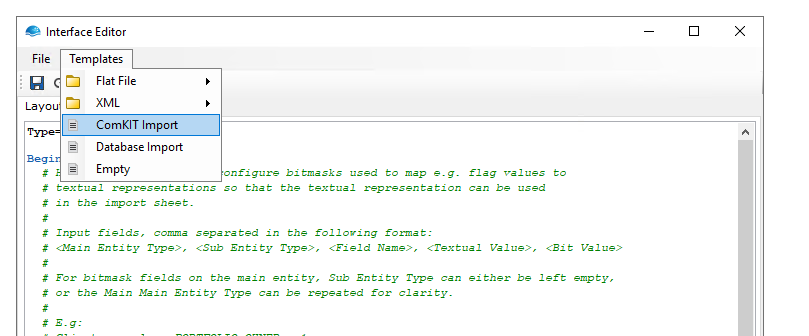

To create an import interface from existing reports, select the ComKIT Import option from the Interface Editor Templates menu.

Import Definition

The import definition file looks similar to the file interface definition. Each report is linked to a section defined within a BeginSection/EndSection block. Each service header and import block is defined in its own block, in the order in which they should be executed.

There are two options specific to sections used in import interfaces:

- HaltOnError (True or False) specifies whether execution should completely stop on any error. If set to False, the import will attempt to continue on the next row when an error occurs.

- HaltOnWarning (True or False) specifies whether execution should stop on warnings. For example, a warning is typically raised when a transaction is created with no counterparty specified; the system then warns that the transaction has been created with the default counterparty ANON. If set to False, the import will attempt to continue on the next row when a warning occurs.
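The effect of the two options can be illustrated with a minimal Python sketch. This is not OmniFi or ComKIT code: `RowError`, `ImportResult`, `run_section`, and `fake_import` are hypothetical names, and the row callback stands in for whatever the service actually does. It only models the control flow described above, where each flag decides whether processing stops or moves on to the next row.

```python
from dataclasses import dataclass, field


class RowError(Exception):
    """Hypothetical: raised when a row cannot be imported."""


@dataclass
class ImportResult:
    processed: int = 0                              # rows attempted so far
    messages: list = field(default_factory=list)    # collected errors/warnings
    halted: bool = False                            # True if a flag stopped the run


def run_section(rows, import_row, halt_on_error=True, halt_on_warning=True):
    """Import rows one by one, honouring the two halt flags.

    import_row(row) is assumed to return a warning text (or None) and to
    raise RowError when the row cannot be imported.
    """
    result = ImportResult()
    for number, row in enumerate(rows, start=1):
        result.processed = number
        try:
            warning = import_row(row)
        except RowError as error:
            result.messages.append(f"row {number}: error: {error}")
            if halt_on_error:
                result.halted = True
                break
            continue  # HaltOnError=False: carry on with the next row
        if warning:
            result.messages.append(f"row {number}: warning: {warning}")
            if halt_on_warning:
                result.halted = True
                break
    return result


def fake_import(row):
    """Stand-in for the real import call (assumption for illustration)."""
    if row["amount"] is None:
        raise RowError("amount is missing")
    if not row["counterparty"]:
        return "created with default counterparty ANON"
    return None


rows = [
    {"amount": 100, "counterparty": "BANK-A"},
    {"amount": 200, "counterparty": ""},
    {"amount": None, "counterparty": "BANK-B"},
    {"amount": 300, "counterparty": "BANK-C"},
]
lenient = run_section(rows, fake_import, halt_on_error=False, halt_on_warning=False)
strict = run_section(rows, fake_import)
```

In this sketch the lenient run attempts all four rows and records two messages, while the strict run stops at the first warning.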

The Bitmasks block is specific to import interfaces and allows configuration of bitmask lookups. It defines textual representations of bitmasks used by the system. One example is client roles, as shown in the example configuration below. Multiple values can then be given as a comma-separated list of names in the import sheet, instead of as the equivalent bitmask number.
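To make the relationship between names and numbers concrete, the following Python sketch combines textual values into a bitmask with a bitwise OR. It is only an illustration of the arithmetic, not OmniFi's implementation; `ROLE_BITS`, `names_to_bitmask`, and `bitmask_to_names` are hypothetical names, and the bit values are the ones used for client roles in the example configuration.

```python
# Hypothetical lookup table mirroring the Client/roles rows of a Bitmasks block.
ROLE_BITS = {
    "PORTFOLIO-OWNER": 1,
    "COUNTERPARTY": 2,
    "ISSUER": 4,
    "BANK": 8,
    "RATING-SERVICE": 16,
}


def names_to_bitmask(text, lookup):
    """Turn a comma-separated list of names into the combined bitmask number."""
    mask = 0
    for name in text.split(","):
        name = name.strip()
        if not name:
            continue  # tolerate empty entries such as a trailing comma
        if name not in lookup:
            raise ValueError(f"unknown bitmask name: {name!r}")
        mask |= lookup[name]  # set the bit that belongs to this name
    return mask


def bitmask_to_names(mask, lookup):
    """Inverse direction: list the names whose bit is set in mask."""
    return [name for name, bit in lookup.items() if mask & bit]


roles = names_to_bitmask("COUNTERPARTY, BANK", ROLE_BITS)  # 2 | 8 == 10
```

A client that is both a counterparty and a bank can therefore be written as `COUNTERPARTY, BANK` rather than as the number 10.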

Type= Import

BeginBitmasks

# Here it is possible to configure bitmasks used to map e.g. flag values to

# textual representations so that the textual representation can be used

# in the import sheet.

#

# Input fields, comma separated in the following format:

# <Main Entity Type>, <Sub Entity Type>, <Field Name>, <Textual Value>, <Bit Value>

#

# For bitmask fields on the main entity, Sub Entity Type can either be left empty,

# or the Main Entity Type can be repeated for clarity.

#

# E.g:

# Client, , roles, PORTFOLIO-OWNER, 1

# Client, , roles, COUNTERPARTY, 2

# Client, , roles, ISSUER, 4

# Client, , roles, BANK, 8

# Client, , roles, RATING-SERVICE, 16

# Client, , flags, BALANCE-FROM-PAYMENTS-P, 2

# Client, , flags, CLEAR-LIMIT-VIOLATIONS-P, 4

# Client, , flags, MARK-LIMIT-APPROVALS-P, 8

# Client, ClientAccount, flags, NO-BALANCE-P, 1

# Client, ClientAccount, flags, MULTI-CCY-P, 2

EndBitmasks

# ComKIT Import (TradeReport)--------------------------------------------------

BeginSection

Data= TradeReport

# Setting HaltOnError to true will cause the interface to stop on any import error.

HaltOnError= True

# Setting HaltOnWarning to true will cause the interface to stop on any import warning.

HaltOnWarning= True

EndSection

Database Module Interfaces

OmniFi supports direct importing into databases using interfaces. This is useful, for example, for automatically populating aggregation databases and data warehouses.

The database inserts are performed using a database module, as used with the Query Module data source (see Database Query Modules for more details). Each module is specifically tailored for the task, and can be audited for functional and security requirements.

Data Definition

Since the import is performed by a database module, the module defines what data needs to be included in the dataset. Each column in the dataset is mapped to a parameter in the selected module statement based on type and header.

It is necessary to create the interface report with unique column headers.
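Because columns are matched to statement parameters by header, a duplicated header would make the mapping ambiguous. A quick pre-check on the report headers might look like the Python sketch below. The function name `duplicate_headers` and the sample headers are hypothetical, and the comparison is an exact string match after trimming whitespace; whether OmniFi itself compares headers case-sensitively is not stated here.

```python
from collections import Counter


def duplicate_headers(headers):
    """Return the column headers that occur more than once, sorted by name."""
    counts = Counter(header.strip() for header in headers)
    return sorted(name for name, count in counts.items() if count > 1)


# Hypothetical report headers; "Rate" appears twice and would be ambiguous.
headers = ["Date", "Currency", "Rate", "Rate"]
problems = duplicate_headers(headers)
if problems:
    print("Rename these columns before importing:", ", ".join(problems))
```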

Import Definition

Below is an example of a database import interface definition.

Type= DbModule

# Database Import (Rate report)-----------------------------------

BeginSection

Data= Rate report

# The alias for the target datasource (System DSN). (If the datasource doesn't have an explicit alias configured, use DSN name instead).

DsnAlias= Default Dsn

Module= Demo

Statement= Import Prices

BatchSize= 15

# Setting HaltOnError causes the interface to stop executing when an import error is detected while generating the SQL code for a batch.

# SQL errors themselves are handled by the database engine (usually yielding a roll-back), but HaltOnError determines if the

# interface should continue with the next batch or stop.

HaltOnError= True

EndSection

There are five options specific to sections used in database module interfaces:

- DsnAlias - Alias of the target database, as configured in OmniFi Admin. If no alias is configured, use the system DSN name.

- Module - The name of the module to use for importing. (This is the directory name under odbc\modules).

- Statement - The specific module statement to use for importing. (This is the name of the xml file inside the module directory).

- BatchSize (1-100000) - The number of rows to import per batch. Inserts will be performed in batches for performance. With this setting it is possible to optimize the performance by adjusting the size of each batch of inserts. Reasonable values are 10-500.

- HaltOnError (True/False) - Defines how errors that occur while generating the SQL code for a batch should be handled. Such errors typically occur when a mandatory field has no value due to missing source data. SQL execution errors are handled by the database engine.
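The interplay of BatchSize and HaltOnError can be sketched in Python as follows. This is a simplified model under stated assumptions, not the way OmniFi is implemented: `batches`, `run_import`, `build_sql`, and the `rate` field are hypothetical, and SQL generation is reduced to a placeholder string. Errors raised while building the SQL for a batch either stop the run or skip to the next batch; errors during execution are deliberately not handled, since those are left to the database engine.

```python
from itertools import islice


def batches(rows, batch_size):
    """Yield consecutive lists of at most batch_size rows."""
    if not 1 <= batch_size <= 100000:
        raise ValueError("batch_size must be between 1 and 100000")
    iterator = iter(rows)
    while batch := list(islice(iterator, batch_size)):
        yield batch


def run_import(rows, build_sql, execute, batch_size=15, halt_on_error=True):
    """Build and execute one statement per batch; return batches executed."""
    executed = 0
    for batch in batches(rows, batch_size):
        try:
            sql = build_sql(batch)
        except ValueError:
            if halt_on_error:
                break      # HaltOnError=True: stop the interface
            continue       # HaltOnError=False: move on to the next batch
        execute(sql)       # execution errors are not caught in this sketch
        executed += 1
    return executed


def build_sql(batch):
    """Placeholder SQL generation that rejects missing mandatory values."""
    for row in batch:
        if row["rate"] is None:
            raise ValueError("mandatory field 'rate' has no value")
    return f"-- insert {len(batch)} rows"


# 35 rows; the 16th row lacks a rate, so the second batch of 15 fails.
rows = [{"rate": 1.0}] * 15 + [{"rate": None}] + [{"rate": 2.0}] * 19
sent = []
run_import(rows, build_sql, sent.append, batch_size=15, halt_on_error=False)
```

With these sample rows, only the batch containing the incomplete row is skipped when `halt_on_error` is False; with True, nothing after that batch is attempted.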
